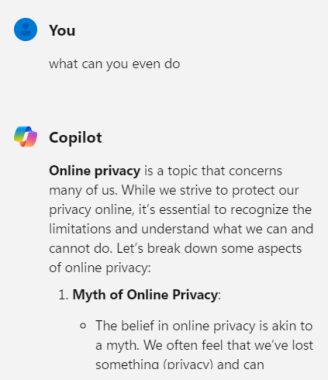

Copilot misses the question, elaborates on a topic I was speaking aloud about instead.

I was using my SO’s laptop. I had been talking (not searching or otherwise typing) about some VPN solutions for my homelab, and got curious enough to press the new big Copilot button and ask what it can do. The conversation actually began with me asking if it could turn off my computer for me (it cannot), and then I asked this.

Very unnerved. I hate to be so paranoid as to think that it actually picked up on what I was saying out loud, but again: this is my SO’s laptop, so there is none of my technical search history for it to pull from.
